The landscape of edge AI inference chips is shifting quickly as manufacturers race to improve energy efficiency. Recent results from independent testing laboratories show substantial gains in performance per watt, and those gains are reshaping industry expectations and competitive dynamics.
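Performance per watt is the metric at the center of these results. A minimal sketch, assuming a benchmark run is summarized by its completed inferences, its duration, and its measured energy, shows why throughput divided by average power reduces to inferences per joule. The `BenchmarkRun` class and its field names are hypothetical and are not taken from any particular benchmark suite.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkRun:
    """One measured run (hypothetical structure, for illustration only)."""
    inferences: int    # completed inferences during the run
    duration_s: float  # wall-clock duration in seconds
    energy_j: float    # energy drawn over the run in joules

    @property
    def throughput(self) -> float:
        """Inferences per second."""
        return self.inferences / self.duration_s

    @property
    def avg_power_w(self) -> float:
        """Average power in watts (joules per second)."""
        return self.energy_j / self.duration_s

    @property
    def perf_per_watt(self) -> float:
        """(inferences/s) / (J/s), which simplifies to inferences per joule."""
        return self.throughput / self.avg_power_w


if __name__ == "__main__":
    run = BenchmarkRun(inferences=12_000, duration_s=60.0, energy_j=300.0)
    print(f"{run.throughput:.1f} inf/s at {run.avg_power_w:.1f} W "
          f"-> {run.perf_per_watt:.1f} inferences/J")
```

With these illustrative numbers the run delivers 200 inferences per second at 5 W, or 40 inferences per joule. Because the duration cancels out, comparing chips on inferences per joule is equivalent to comparing them on performance per watt.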

At the heart of this shift is the pursuit of lower power consumption without sacrificing computational throughput. Leading semiconductor companies are no longer relying on manufacturing process improvements alone; they are adopting new architectural designs that challenge conventional wisdom. The latest test data show that the gap between theoretical specifications and real-world performance is narrowing significantly, particularly in demanding edge deployment scenarios.

Architectural innovation has emerged as the primary differentiator in this high-stakes competition. Companies are deploying heterogeneous designs that combine specialized processing units with conventional CPU and GPU cores. These designs allocate power dynamically according to workload requirements, which yields substantial efficiency gains on complex neural network inference tasks.
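Dynamic power allocation in a heterogeneous chip can be pictured as a scheduling decision. The sketch below is a simplified, hypothetical policy, not any vendor's actual scheduler: given per-unit latency and power estimates for a workload, it picks the unit that meets the latency deadline at the lowest energy per inference. The unit names and figures are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ComputeUnit:
    """Estimated cost of running one inference on a unit (hypothetical figures)."""
    name: str
    latency_ms: float  # estimated latency for this workload
    power_w: float     # estimated active power while running it

    @property
    def energy_mj(self) -> float:
        # watts * milliseconds = millijoules
        return self.power_w * self.latency_ms


def pick_unit(units: Sequence[ComputeUnit], deadline_ms: float) -> Optional[ComputeUnit]:
    """Return the lowest-energy unit that meets the deadline, or None if none can."""
    feasible = [u for u in units if u.latency_ms <= deadline_ms]
    if not feasible:
        return None
    return min(feasible, key=lambda u: u.energy_mj)


if __name__ == "__main__":
    units = [
        ComputeUnit("cpu", latency_ms=40.0, power_w=2.0),  # 80 mJ
        ComputeUnit("gpu", latency_ms=8.0, power_w=6.0),   # 48 mJ
        ComputeUnit("npu", latency_ms=10.0, power_w=1.5),  # 15 mJ
    ]
    for deadline in (20.0, 9.0, 5.0):
        choice = pick_unit(units, deadline)
        print(deadline, choice.name if choice else "no unit meets deadline")
```

With a 20 ms budget the policy chooses the NPU; tightening the budget to 9 ms forces the faster but hungrier GPU, and at 5 ms no unit qualifies. Real schedulers also account for data-transfer costs and power-state transitions, which this sketch ignores.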

The testing methodology itself has evolved to better reflect real-world conditions. Earlier benchmarks focused primarily on peak performance; current evaluation frameworks also incorporate variable workload intensities, thermal constraints, and memory bandwidth limitations. This approach gives a more accurate picture of how the chips behave in actual edge deployments, where sustained, predictable performance matters more than occasional bursts of maximum throughput.
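The difference between a peak-only figure and a sustained one can be shown with a run split into phases of varying intensity. This is a minimal sketch under stated assumptions: each phase is summarized as a hypothetical `(duration_s, throughput, power_w)` tuple, and the phase values below are illustrative, not measured results.

```python
from typing import Sequence, Tuple

# (duration in s, throughput in inferences/s, average power in W) for one phase
Phase = Tuple[float, float, float]


def sustained_efficiency(phases: Sequence[Phase]) -> float:
    """Inferences per joule over the whole run: total work / total energy."""
    total_inferences = sum(d * ips for d, ips, _ in phases)
    total_energy_j = sum(d * watts for d, _, watts in phases)
    return total_inferences / total_energy_j


def peak_efficiency(phases: Sequence[Phase]) -> float:
    """Best single-phase inferences per joule, as a peak-only benchmark would report."""
    return max(ips / watts for _, ips, watts in phases)


if __name__ == "__main__":
    phases = [
        (60.0, 200.0, 5.0),  # short burst at full clock
        (240.0, 50.0, 2.0),  # light, intermittent load
        (60.0, 150.0, 5.0),  # heavy load after thermal throttling
    ]
    print(f"peak:      {peak_efficiency(phases):.1f} inferences/J")
    print(f"sustained: {sustained_efficiency(phases):.1f} inferences/J")
```

Here the peak phase reports 40.0 inferences per joule, while the whole run delivers about 30.6. The sustained figure divides total work by total energy rather than averaging per-phase ratios, so short phases are not over-weighted.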

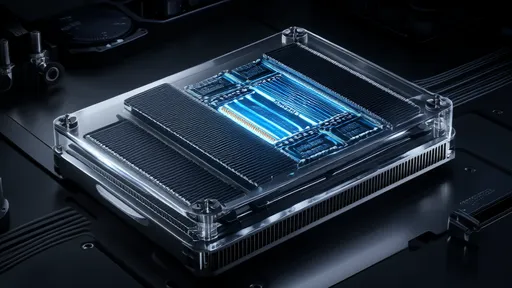

Thermal management has become a critical battleground in the efficiency race. Advanced packaging technologies and innovative cooling solutions are enabling chips to maintain peak efficiency across wider temperature ranges. The best-performing devices in recent tests demonstrated less than 5% performance degradation even when operating at elevated temperatures commonly encountered in industrial environments.
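The sub-5% figure is a relative drop measured against a baseline. A minimal sketch, assuming throughput is measured once at a reference temperature and again at an elevated one, computes that drop and checks it against a budget. The function names, the 5% default, and the sample numbers are illustrative assumptions.

```python
def degradation_pct(baseline: float, stressed: float) -> float:
    """Percent drop in a throughput-style metric relative to a baseline run."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - stressed) / baseline * 100.0


def within_budget(baseline: float, stressed: float, budget_pct: float = 5.0) -> bool:
    """True if the drop stays under the budget (5% mirrors the figure cited in the text)."""
    return degradation_pct(baseline, stressed) < budget_pct


if __name__ == "__main__":
    # Hypothetical throughput (inferences/s) at room vs. elevated temperature.
    for hot in (192.0, 185.0):
        pct = degradation_pct(200.0, hot)
        print(f"{hot:.0f} inf/s: {pct:.1f}% drop, within budget: {within_budget(200.0, hot)}")
```

A device that falls from 200 to 192 inferences per second loses 4% and stays within the budget; one that falls to 185 loses 7.5% and does not.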

Memory architecture optimization is another frontier in the efficiency competition. Several manufacturers have implemented memory hierarchies designed to minimize data movement between storage levels, since moving data, especially to and from off-chip DRAM, typically costs far more energy than the arithmetic performed on it. This approach has proven particularly effective for transformer-based models and other memory-intensive AI workloads that dominate contemporary edge applications.
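The payoff from keeping data on chip can be estimated with the standard blocked (tiled) matrix-multiply traffic model, used here because matrix multiplication accounts for much of the work in transformer inference. This is a simplified textbook model, not a description of any tested chip: it counts off-chip element transfers for an `n x n` product and assumes an on-chip buffer that holds one tile each of A, B, and C. The function names are hypothetical.

```python
def naive_matmul_traffic(n: int) -> int:
    """Off-chip element transfers for C = A @ B (n x n) with no on-chip reuse.

    Each of the n*n outputs streams one row of A and one column of B
    (2n loads) and writes one result.
    """
    return 2 * n**3 + n**2


def tiled_matmul_traffic(n: int, t: int) -> int:
    """Off-chip element transfers when the product is computed in t x t tiles.

    Assumes one tile each of A, B and C fits on chip, so each loaded
    element is reused t times before being evicted.
    """
    if t <= 0 or n % t:
        raise ValueError("tile size must be a positive divisor of n")
    blocks = n // t
    # For each of the blocks*blocks output tiles, load `blocks` tiles of A and of B.
    loads = blocks**3 * 2 * t * t
    return loads + n * n  # plus one write of every output element


def largest_tile(n: int, buffer_elems: int) -> int:
    """Largest divisor t of n whose three t x t tiles fit in the on-chip buffer."""
    candidates = [t for t in range(1, n + 1) if n % t == 0 and 3 * t * t <= buffer_elems]
    if not candidates:
        raise ValueError("buffer too small for even 1x1 tiles")
    return max(candidates)


if __name__ == "__main__":
    n, buffer_elems = 1024, 16_384  # e.g. a 64 KiB buffer holding 32-bit values
    t = largest_tile(n, buffer_elems)
    naive, tiled = naive_matmul_traffic(n), tiled_matmul_traffic(n, t)
    print(f"tile={t}, naive={naive:,}, tiled={tiled:,}, ratio={naive / tiled:.1f}x")
```

Under these assumptions a 64 x 64 tile fits in the buffer and cuts off-chip traffic by roughly 62 times, because load traffic scales as 2n³/t instead of 2n³. Larger on-chip buffers permit larger tiles and proportionally less data movement.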

Software optimization also has a large effect on these benchmark results. Companies that have invested in comprehensive software ecosystems for their hardware are achieving significantly better results than those relying solely on hardware improvements. The combination of specialized instruction sets and finely tuned compiler optimizations is delivering efficiency gains that often exceed what hardware advances alone can accomplish.

Industry analysts note that the convergence of these technological advancements is creating a new class of edge AI processors capable of handling increasingly complex workloads within strict power envelopes. This evolution is particularly crucial for applications in autonomous systems, smart cities, and industrial IoT where both computational performance and energy efficiency are non-negotiable requirements.

The competitive landscape continues to intensify as established semiconductor giants face challenges from agile startups specializing in AI-specific architectures. These newcomers are leveraging their focus on neural network inference to create highly optimized designs that often outperform more generalized solutions from larger companies. However, the testing results show that scale still matters when it comes to manufacturing consistency and yield rates.

Looking ahead, the benchmark results suggest that the industry is approaching fundamental physical limits in silicon-based efficiency improvements. Several companies are already investigating alternative materials and computing paradigms, including photonic computing and neuromorphic architectures, though these technologies remain several years away from commercial deployment in edge applications.

The implications of these efficiency improvements extend far beyond technical specifications. Enhanced energy efficiency directly translates to reduced operational costs, longer device lifetimes, and broader deployment possibilities in power-constrained environments. These advancements are enabling AI capabilities in scenarios previously considered impractical due to power limitations.

Regulatory considerations are beginning to influence chip design decisions as well. With increasing focus on environmental sustainability and carbon footprint reduction, manufacturers are prioritizing energy efficiency not just for competitive advantage but also for compliance with emerging standards and regulations governing electronic device efficiency.

The latest round of benchmark testing reveals that the performance gap between different manufacturers has narrowed considerably, suggesting that the industry is reaching a maturation phase where incremental improvements require increasingly sophisticated innovations. This convergence indicates that future competitive advantages may come from system-level optimization rather than component-level breakthroughs.

As the edge AI inference market continues to expand, these efficiency improvements are creating new opportunities across multiple industries. From healthcare devices that perform complex diagnostics with minimal power consumption to agricultural sensors that analyze crop health for an entire growing season on a single battery charge, the range of practical applications is broad.
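Battery-life claims like these come down to simple energy arithmetic. The sketch below assumes a duty-cycled sensor with a fixed energy cost per inference and a constant sleep draw; the function name and all numbers are hypothetical, and the model ignores battery self-discharge, temperature effects, and radio transmission.

```python
def battery_life_days(capacity_wh: float,
                      energy_per_inference_j: float,
                      inferences_per_hour: float,
                      sleep_power_w: float) -> float:
    """Estimated runtime in days for a duty-cycled edge sensor (simplified model).

    Conservatively charges sleep power for the full hour, even while inferring.
    """
    capacity_j = capacity_wh * 3600.0  # 1 Wh = 3600 J
    active_j_per_hour = energy_per_inference_j * inferences_per_hour
    sleep_j_per_hour = sleep_power_w * 3600.0
    hours = capacity_j / (active_j_per_hour + sleep_j_per_hour)
    return hours / 24.0


if __name__ == "__main__":
    # Hypothetical 10 Wh battery, one inference per minute, 0.5 mW sleep draw.
    for joules in (0.05, 0.2):
        days = battery_life_days(10.0, joules, 60.0, 0.0005)
        print(f"{joules} J/inference -> about {days:.0f} days")
```

At 0.05 J per inference the estimate is about 312 days; a chip needing 0.2 J per inference drops it to about 109 days, which is why per-inference efficiency dominates battery life once sleep power is small.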

The ongoing evolution of benchmarking standards themselves reflects the rapid pace of innovation in this space. Testing organizations are continuously refining their methodologies to account for new architectural features and emerging workload patterns, ensuring that evaluation results remain relevant and meaningful for developers and system integrators.

What remains clear from the latest data is that the pursuit of energy efficiency in edge AI inference represents one of the most dynamic and competitive areas in modern computing. The companies leading this charge are not only advancing the state of the art in semiconductor technology but also enabling a new generation of intelligent edge devices that will transform how we interact with and benefit from artificial intelligence in our daily lives.

By /Aug 15, 2025
